Advanced Micro Devices (AMD) is currently undergoing a significant market re-evaluation, driven by its strategic advancements in Artificial Intelligence (AI) accelerators and integrated computing systems. This shift has transformed Wall Street sentiment from skepticism to strong bullishness, as evidenced by recent analyst upgrades and increased price targets. The company’s commitment to an open AI ecosystem, anchored by its ROCm software platform and comprehensive rack-scale solutions, positions it as a compelling and viable alternative to Nvidia’s dominant, proprietary offerings. This approach is particularly appealing to hyperscale cloud providers and sovereign AI initiatives seeking diversification and greater interoperability within their AI infrastructure.

Looking ahead, AMD’s forthcoming Zen 6 Central Processing Units (CPUs) are poised to deliver substantial gains in clock speeds and Instructions Per Cycle (IPC), leveraging TSMC’s cutting-edge 2nm lithography. This aggressive technological push aims to solidify AMD’s performance leadership, especially within the high-performance gaming segment. In parallel, Intel’s Nova Lake architecture is emerging with a distinct strategy, emphasizing significantly higher core counts and a new platform. This indicates a focus on maximizing multi-threaded performance, likely targeting professional and data-intensive workloads. The broader market context reveals unprecedented and sustained capital expenditures by major tech giants in AI infrastructure. These multibillion-dollar investments underscore a fundamental, long-term shift in demand for high-performance computing, creating a robust and expanding market for semiconductor providers like AMD.

The rapid succession of significant analyst upgrades and substantial price target increases for AMD signifies a fundamental re-evaluation of the company’s long-term growth trajectory. This shift indicates that the market is now recognizing AMD’s strategic pivot and execution in AI as a sustainable competitive advantage, moving beyond its previous perception as merely a traditional semiconductor play. This re-rating suggests that AMD’s AI strategy is not just theoretical but is beginning to yield tangible results and market acceptance, justifying a higher valuation multiple and a more optimistic long-term outlook.

Furthermore, the unprecedented scale of capital commitments from hyperscale cloud providers and sovereign entities for AI infrastructure signals that the current AI boom is not a cyclical phenomenon or a fleeting trend, but rather a fundamental, multi-year investment cycle. This will permanently reshape global IT infrastructure, ensuring sustained and substantial demand for high-performance chips and integrated systems well into the future.

AMD’s AI-Driven Market Resurgence

Recent Stock Performance and Analyst Sentiment

Advanced Micro Devices has recently experienced a notable shift in market perception, moving from a stock that Wall Street viewed with skepticism to a favored investment. On a recent Monday, AMD’s stock advanced by 1%, closing at $129.58. This momentum was amplified by a series of bullish analyst upgrades. Ben Reitzes, an analyst at Melius Research, upgraded AMD stock from “hold” to “buy” and raised his price target from $110 to $175 per share.1 The revised target implied roughly 26% upside from the stock’s trading price at the time of the note.1

Reitzes articulated his revised outlook in a client note, stating that “many things have changed for the better since the beginning of the year” for AMD, specifically highlighting “substantial advances in AI accelerators and computing systems”. He further anticipated “colossal GPU revenue upside ahead, especially with inferencing workloads taking off”.1 This perspective underscores a growing conviction that AMD’s strategic focus on AI is yielding tangible benefits and is poised for significant future growth.

Adding to this positive sentiment, CFRA analyst Angelo Zino also upgraded AMD, elevating his rating from “buy” to “strong buy” and increasing his price target from $125 to $165, which represented a 19% upside.1 These consecutive upgrades from prominent research firms underscore a collective recognition of AMD’s strengthened position in the burgeoning AI market. Despite broader “tech stocks under duress,” AMD’s rally has been conspicuous, leading to some discussion about a potential market “bubble.” However, market participants generally believe the company “still has plenty of gas left in the tank”.1
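
The upside percentages quoted with each price target follow from simple arithmetic, and it can be useful to check which reference price they assume. The sketch below is illustrative only: the function names are hypothetical, and the inputs are the targets, quoted upsides, and the $129.58 close given above. Measured against that close, the two targets work out to roughly 35% and 27% upside, so the quoted 26% and 19% figures appear to be based on a higher reference price, near $139. That reference price is an inference from the arithmetic, not a figure stated in the source.

```python
def implied_upside(price_target: float, reference_price: float) -> float:
    """Fractional upside implied by a price target relative to a reference price."""
    return price_target / reference_price - 1.0


def implied_reference_price(price_target: float, quoted_upside: float) -> float:
    """Reference price that would make a target correspond to the quoted upside."""
    return price_target / (1.0 + quoted_upside)


MONDAY_CLOSE = 129.58  # closing price cited in the text

# (price target, upside quoted in the text) for each upgrade
upgrades = {
    "Melius Research": (175.0, 0.26),
    "CFRA": (165.0, 0.19),
}

for firm, (target, quoted) in upgrades.items():
    vs_close = implied_upside(target, MONDAY_CLOSE)
    reference = implied_reference_price(target, quoted)
    print(
        f"{firm}: {vs_close:.1%} upside vs. ${MONDAY_CLOSE:.2f} close; "
        f"quoted {quoted:.0%} implies a reference price near ${reference:.2f}"
    )
```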

The observed outperformance of chip stocks over software stocks in the current AI cycle highlights a crucial investment theme: the foundational layer of AI infrastructure, specifically hardware, is currently perceived as the primary beneficiary of the AI boom.2 This suggests that companies are prioritizing the build-out of underlying “AI factories” before fully realizing the monetization of AI agents or Software-as-a-Service (SaaS) solutions.2 For investors, this indicates that the most immediate and potentially secure way to capitalize on the AI trend is by investing in the enablers of AI – the semiconductor manufacturers and infrastructure providers – as they are at the forefront of this foundational build-out.

AMD’s AI Accelerator Portfolio and Open Ecosystem

AMD is actively pursuing an “open-standards, rack-scale” strategy, aiming to deliver a comprehensive, “plug-and-play stack” for data centers.1 This integrated solution encompasses its 5th-generation EPYC high-performance CPUs, the new Instinct MI350 series of AI-focused GPUs, and Pensando Smart Network Interface Cards (NICs).1 This holistic approach is designed to provide a complete solution for complex AI workloads.

A cornerstone of this strategy is the AMD Instinct MI300X GPU Accelerator, which was officially released on December 6, 2023.3 This accelerator is engineered to meet the escalating demands of generative AI and high-performance computing (HPC) workloads. The MI300X boasts impressive specifications, including 304 GPU compute units, 192 GB of HBM3 memory with a substantial 5.3 TB/s bandwidth, and a processing power of 2.6 PFLOPS (FP8) per GPU.3 It is manufactured using TSMC’s 5nm and 6nm process technology.3 A significant competitive advantage of the MI300X lies in its memory capacity, which at 192 GB HBM3, is “well over double NVIDIA’s H100 SXM at 80 GB”.3 This substantial memory pool facilitates “faster model training and inference” and enables the training of “massive AI models without splitting them across multiple GPUs,” thereby reducing data movement overhead and simplifying deployments.3
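
To make the memory-capacity argument concrete, the following minimal sketch estimates how many accelerators are needed just to hold a model’s weights. It is a simplification under stated assumptions: it counts weights only and ignores the KV cache, activations, optimizer state, and framework overhead, all of which raise real requirements. The 70-billion-parameter model and the function names are hypothetical illustrations; the 192 GB and 80 GB capacities are the figures quoted above.

```python
import math

# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_footprint_gb(params_billions: float, precision: str) -> float:
    """Approximate weight size in GB: billions of parameters times bytes per parameter."""
    return params_billions * BYTES_PER_PARAM[precision]


def min_gpus_for_weights(params_billions: float, precision: str, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights alone."""
    return math.ceil(weight_footprint_gb(params_billions, precision) / gpu_memory_gb)


# Hypothetical 70B-parameter model stored in FP16 (about 140 GB of weights).
for name, memory_gb in (("MI300X", 192), ("H100 SXM", 80)):
    gpus = min_gpus_for_weights(70, "fp16", memory_gb)
    print(f"{name} ({memory_gb} GB): at least {gpus} GPU(s) for weights alone")
```

Under these assumptions the weights fit on a single 192 GB device but must be split across at least two 80 GB devices, which is the kind of model splitting the quoted passage refers to.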

The Instinct MI300A, another key component of the MI300 series, is distinguished as the “world’s first unified CPU/GPU accelerated processing unit (APU)”.4 This innovative design integrates AMD Zen4 CPUs and AMD CDNA™3 GPUs with shared unified memory, specifically engineered to overcome performance bottlenecks arising from narrow interfaces between CPU and GPU, programming overhead for data management, and the need for code modification across GPU generations.4 This unified architecture is designed for both small deployments and large-scale computing clusters.4 The MI325X, a newer offering in the MI300 series, further enhances capabilities with 256 GB HBM3E memory, 6 TB/s memory bandwidth, and a 1000W Thermal Design Power (TDP).4
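
Memory bandwidth matters for inference as much as capacity does. A common back-of-the-envelope bound treats single-stream token generation as bandwidth-limited: each generated token requires reading every weight once, so tokens per second cannot exceed bandwidth divided by weight size. The sketch below applies that bound to the 5.3 TB/s and 6 TB/s figures quoted above. The 140 GB weight size is a hypothetical example (roughly a 70-billion-parameter model in FP16), and the estimate ignores KV-cache traffic, batching, and compute limits, so it is an upper bound rather than a performance prediction.

```python
def decode_tokens_per_s_upper_bound(bandwidth_tb_s: float, weights_gb: float) -> float:
    """Bandwidth-limited ceiling on batch-size-1 decode speed.

    Assumes every weight byte is read from memory exactly once per token.
    """
    bandwidth_gb_s = bandwidth_tb_s * 1000.0  # 1 TB/s = 1000 GB/s
    return bandwidth_gb_s / weights_gb


HYPOTHETICAL_WEIGHTS_GB = 140.0  # illustrative assumption, not a source figure

for name, bandwidth in (("MI300X", 5.3), ("MI325X", 6.0)):
    ceiling = decode_tokens_per_s_upper_bound(bandwidth, HYPOTHETICAL_WEIGHTS_GB)
    print(f"{name}: at most about {ceiling:.1f} tokens/s per stream")
```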

At its “Advancing AI 2025” event on June 12, 2025, AMD unveiled its “comprehensive, end-to-end integrated AI platform vision” and officially introduced the AMD Instinct MI350 Series accelerators.5 The MI350 Series has demonstrated exceptional energy efficiency, achieving a “38x improvement” in AI training and high-performance computing nodes, significantly exceeding AMD’s five-year goal of 30x.5 AMD also provided a preview of “Helios,” its next-generation AI rack, which will be built on the forthcoming AMD Instinct MI400 Series GPUs. These GPUs are projected to deliver “up to 10x more performance running inference on Mixture of Experts models” compared to the previous generation and will integrate “Zen 6”-based AMD EPYC “Venice” CPUs.5

The AMD ROCm™ software platform is a foundational element of AMD’s strategy, continuously evolving to make it “easier for engineers to run AI apps on its gear”.1 ROCm is explicitly positioned as a “comprehensive game plan to reclaim market share in the AI space”.3 This open software ecosystem is crucial for attracting developers and ensuring broad compatibility for AMD’s hardware. This comprehensive approach has already yielded significant results, with AMD securing “a whopping $10 billion in commitments from major Middle East players in developing AMD-powered AI systems”.1

The rapid product iteration and introduction of new AI accelerators (MI300X, MI325X, MI350, MI400) within a relatively short timeframe (December 2023 to June 2025 and beyond) demonstrate an aggressive, high-velocity commitment to closing the performance gap with Nvidia and capturing significant market share. This indicates a substantial allocation of research and development resources and a highly agile productization strategy. The “38x improvement” in energy efficiency for the MI350 is a critical long-term competitive advantage that directly addresses the escalating power consumption and cooling challenges prevalent in modern AI data centers.5 This positions AMD not just as a performance leader but also as a more sustainable and economically attractive solution for large-scale AI deployments, where operational costs are a major concern.
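
An efficiency multiple such as 38x is easier to reason about when converted into an energy saving and an annualized rate. The sketch below does both. It assumes the multiple means 38 times more work per unit of energy and that the gain accrued over the five-year goal window mentioned above; the function names are hypothetical.

```python
def energy_reduction(efficiency_gain: float) -> float:
    """Fraction of energy saved for the same work at a given efficiency multiple."""
    return 1.0 - 1.0 / efficiency_gain


def annualized_gain(total_gain: float, years: float) -> float:
    """Constant yearly multiple that compounds to total_gain over the period."""
    return total_gain ** (1.0 / years)


for label, gain in (("30x goal", 30.0), ("38x achieved", 38.0)):
    print(
        f"{label}: {energy_reduction(gain):.1%} less energy for the same work, "
        f"about {annualized_gain(gain, 5):.2f}x per year over five years"
    )
```

Under these assumptions, a 38x gain corresponds to roughly 97% less energy for the same work, and to efficiency slightly more than doubling each year.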

Competitive Dynamics in the AI Market: AMD vs. Nvidia

AMD is actively “gunning for a much bigger role in the AI arms race”,1 with its Instinct MI300X representing a “most determined effort to challenge NVIDIA’s near-monopoly in the AI GPU market”.3 At its June 12 event, AMD explicitly highlighted the “price and performance advantages of its AI data center systems over rival Nvidia (NVDA)”.

A key technical differentiator for AMD’s MI300X is its substantial 192 GB HBM3 memory, which is “well over double NVIDIA’s H100 SXM at 80 GB”.3 This memory advantage is particularly beneficial for “massive LLMs” and “memory-intensive applications,” allowing for faster model training and inference by reducing the need to split models across multiple GPUs.3 Conversely, Nvidia’s H100 maintains an edge in mixed-precision training (FP8 and FP16) and benefits significantly from its “mature CUDA software stack”.3

Morningstar’s analysis suggests that while “Nvidia is ahead of AMD on AI hardware, and perhaps still sizably ahead on AI software,” AMD is expected to “carve out a piece of the pie over time”.7 CFRA analyst Angelo Zino believes AMD is “closing the competitive gap to NVIDIA (NVDA) in 2026 as it launches the MI400x and prepares to shift to rack-scale solutions”.1

Crucially, large AI and cloud leaders, including Oracle, OpenAI (with founder Sam Altman making an appearance at AMD’s event), and AWS, are actively supporting AMD as an “external alternative to Nvidia’s dominant (but capable) solutions”.1 This support underscores a broader industry imperative to diversify supply chains and mitigate risks associated with reliance on a single dominant vendor. This strategic diversification provides AMD with significant customer validation and crucial market access. AMD’s ROCm software platform is positioned as a “comprehensive game plan to reclaim market share” 3 and is demonstrating “growing momentum” 5, further strengthening its appeal as an open alternative.

AMD’s strategy to differentiate itself through superior memory capacity (MI300X vs. H100) and a comprehensive, open, rack-scale solution directly targets the evolving needs of increasingly large-scale AI models and the desire of hyperscalers for integrated, flexible, and less proprietary systems.1 This signifies a strategic shift from component-level competition to a system-level value proposition. As AI models continue to grow exponentially in size, memory capacity becomes a critical bottleneck. Offering a solution that can handle larger models natively, combined with a full-rack system, simplifies deployment for large customers and addresses a pain point that Nvidia’s component-focused approach might not fully resolve. This elevates the competition from raw chip performance to total cost of ownership, ease of integration, and system-level efficiency for complex AI workloads.

| Category | AMD MI300X | NVIDIA H100 |
| --- | --- | --- |
| Memory Capacity | 192 GB HBM3 (fits larger AI models natively) | 80 GB HBM3 (may require model splitting) |
| Compute Power | 304 GPU compute units | 132 SMs with Tensor Cores |
| AI Training | Faster for large models | Faster for small-to-mid models |
| AI Inference | Better for massive LLMs | Better for high-throughput inference |
| HPC Workloads | Excels in memory-heavy tasks | Better CUDA support |
| Ecosystem | Strong for AI and memory-bound workloads | Mature CUDA software stack |
| Power Efficiency | Lower power per large model | Lower power per small model |

Source: TensorWave (reference 3).

The Future of Computing: AMD Zen 6 vs. Intel Nova Lake

AMD Zen 6: Architectural Ambitions and Performance Targets

AMD’s next-generation Zen 6 CPUs are slated for a 2026 launch, encompassing the “Venice” series for its EPYC server line and “Olympic Ridge” for Ryzen desktop processors.5 Leaked information suggests that AMD is targeting “insane” clock speeds “well above 6 GHz” for its Zen 6 Ryzen CPUs, with some speculation pointing towards clock rates of 6.4-6.5 GHz.8 It is notable that the same design team responsible for the highly successful Zen 4 architecture is reportedly working on Zen 6, a team known for “sandbagging expectations” (Zen 4 launched at 5.7 GHz despite earlier rumors of 5.0 GHz+).9 This historical pattern suggests that the final clock speeds could potentially exceed even the ambitious leaked targets.

Beyond raw clock speeds, Zen 6 aims for significant architectural improvements and higher performance per clock (IPC), with reported targets of 10-15% IPC gains.8 A critical enabler for these targets is AMD’s adoption of TSMC’s advanced 2nm lithography node (specifically N2X or N2) for Zen 6 Venice CPUs and Zen 6 Core Complex Dies (CCDs).8 This is a substantial generational leap, described as “almost three process nodes” beyond the N4P node used for Zen 5.8 It is also a high-risk, high-reward manufacturing strategy: the node promises significant performance and efficiency gains, but early adoption of a bleeding-edge process could affect yield rates, manufacturing costs, or time-to-market. If executed successfully, however, the move could establish a substantial competitive lead in raw performance.

Zen 6 CCDs are expected to feature 12 cores and 48MB of L3 cache, marking a 50% increase in both cores and L3 cache per CCD compared to previous generations.8 AMD also retains the capability to stack two layers of L3 V-Cache onto these CCDs 8, and the 3D V-Cache technology is anticipated to be implemented similarly to Zen 5.11 To enhance multi-CCD performance in desktop processors, AMD is introducing a new low-latency bridge connection between CCDs.11 The platform will also feature a new client I/O die for desktop (manufactured on Samsung 4LPP/4nm EUV) and updated memory controllers designed to support higher DDR5 speeds, potentially exceeding 10000 MT/s with a 1:2 clock divider and improved 1:1 speeds beyond DDR5-6400.11
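
The memory speeds mentioned above translate into theoretical peak bandwidth through a standard formula: transfers per second times bytes per transfer times the number of channels. The sketch below applies it to DDR5-6400 and DDR5-10000. It assumes a typical desktop layout of two 64-bit channels, which is not stated in the leak, and it interprets the 1:1 and 1:2 ratios as the memory controller clock relative to the memory clock, as those ratios are commonly understood on current AMD platforms. All function names are hypothetical.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: int, channels: int = 2, bus_width_bits: int = 64) -> float:
    """Theoretical peak DRAM bandwidth in GB/s.

    transfer_rate_mt_s is the DDR rating, e.g. 6400 for DDR5-6400.
    Defaults assume two 64-bit channels (an illustrative assumption).
    """
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mt_s * bytes_per_transfer * channels / 1000


def controller_clock_mhz(transfer_rate_mt_s: int, divider: int) -> float:
    """Memory controller clock for a 1:divider ratio to the memory clock."""
    memory_clock_mhz = transfer_rate_mt_s / 2  # DDR moves data twice per clock
    return memory_clock_mhz / divider


for rating, divider in ((6400, 1), (10000, 2)):
    print(
        f"DDR5-{rating}: {peak_bandwidth_gb_s(rating):.1f} GB/s peak, "
        f"controller at {controller_clock_mhz(rating, divider):.0f} MHz (1:{divider})"
    )
```

These are ceilings derived from the interface width and transfer rate; sustained bandwidth in practice is lower.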

The combination of “insane” clock speeds, increased IPC, higher core counts per CCD, and a new inter-CCD bridge suggests that AMD is not merely aiming for incremental improvements but pursuing a holistic architectural overhaul.8 This comprehensive approach is designed to maintain and extend its gaming performance lead while simultaneously addressing and enhancing multi-threaded workloads more effectively.
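
As a rough illustration of how the leaked clock and IPC targets would compound, the sketch below multiplies the two gains together. It assumes single-threaded performance scales linearly with clock speed times IPC, which real workloads rarely do, and it uses a 5.7 GHz baseline boost clock for the current generation as an illustrative assumption. The scenario labels and function name are hypothetical.

```python
def single_thread_uplift(new_clock_ghz: float, base_clock_ghz: float, ipc_gain: float) -> float:
    """Fractional uplift if performance scaled as clock speed times IPC."""
    return (new_clock_ghz / base_clock_ghz) * (1.0 + ipc_gain) - 1.0


BASELINE_GHZ = 5.7  # assumed baseline boost clock, for illustration only

# (target clock in GHz, IPC gain) taken from the low and high ends of the leaked ranges
scenarios = {
    "low end (6.0 GHz, +10% IPC)": (6.0, 0.10),
    "high end (6.5 GHz, +15% IPC)": (6.5, 0.15),
}

for label, (clock, ipc) in scenarios.items():
    uplift = single_thread_uplift(clock, BASELINE_GHZ, ipc)
    print(f"{label}: about {uplift:.0%} idealized single-thread uplift")
```

Under these assumptions the idealized uplift ranges from roughly 16% to roughly 31%, which is best read as a ceiling on what the rumored figures could deliver.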

Intel Nova Lake: Core Count, Architecture, and Platform Shift

Intel’s Nova Lake architecture is projected for a 2026-2027 launch, succeeding the current Arrow Lake generation.12 This new architecture is expected to be accompanied by a significant platform change, moving to a new LGA-1954 socket. This marks a departure from the short-lived LGA-1851 socket, which will not be compatible with Nova Lake.13 Despite the increased pin count (1,954 pins), the LGA-1954 socket is designed to retain the same physical dimensions (45mm x 37.5mm) as LGA-1851, potentially allowing for backward compatibility with existing CPU coolers, pending mounting bracket support.13

Rumored core configurations for Nova Lake include a top-tier SKU with an impressive 52 cores, comprising 16 Performance “Coyote Cove” P-cores, 32 Efficiency “Arctic Wolf” E-cores, and 4 Low-Power Efficiency (LP-E) cores.13 This represents more than double the core count of the preceding Arrow Lake architecture.15 Other configurations are rumored to include 28 cores (8P+16E+4LPE) and 16 cores (4P+8E+4LPE).14
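
The rumored configurations can be tallied with a small data structure to confirm that the per-type counts add up to the headline totals. The sketch below is illustrative: the class and variable names are hypothetical, the Nova Lake figures are the rumored ones listed above, and the Arrow Lake baseline of 8 P-cores plus 16 E-cores is an assumption added for comparison rather than a figure from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreConfig:
    p_cores: int
    e_cores: int
    lp_e_cores: int = 0

    @property
    def total(self) -> int:
        """Total core count across all core types."""
        return self.p_cores + self.e_cores + self.lp_e_cores


# Rumored Nova Lake configurations from the text.
NOVA_LAKE_RUMORED = {
    "top": CoreConfig(p_cores=16, e_cores=32, lp_e_cores=4),
    "mid": CoreConfig(p_cores=8, e_cores=16, lp_e_cores=4),
    "entry": CoreConfig(p_cores=4, e_cores=8, lp_e_cores=4),
}

# Assumed top Arrow Lake desktop configuration (not stated in the text).
ARROW_LAKE_TOP = CoreConfig(p_cores=8, e_cores=16)

for tier, config in NOVA_LAKE_RUMORED.items():
    ratio = config.total / ARROW_LAKE_TOP.total
    print(f"{tier}: {config.total} cores ({ratio:.2f}x the assumed Arrow Lake top SKU)")
```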

Nova Lake is expected to feature a modular chiplet design, with compute tiles potentially manufactured on Intel’s internal 14A process, while supporting tiles might be outsourced to external foundries like TSMC’s 2nm node.13 Intel’s co-CEO has confirmed that some parts of Nova Lake will be built externally, though the majority will remain in-house.14 The new LGA-1954 platform will support next-generation 900-series chipsets, bringing advancements such as Wi-Fi 7, Bluetooth 5.4, support for DDR5-6400 (or faster) memory, Thunderbolt 5/USB4 v2, and enhanced PCIe 5.0 with future PCIe 6.0 readiness.13

Nova Lake is also likely to integrate dedicated AI accelerators (NPUs) designed for emerging workloads like real-time video filters and generative AI applications, extending the CPU’s utility beyond traditional gaming and productivity.13 Furthermore, it may include a dual-architecture GPU tile, which could potentially render discrete graphics obsolete in certain segments.12 The 52-core die is potentially designated for both desktop and high-performance laptop (HX-grade) SKUs.12 The Thermal Design Power (TDP) is allegedly 150W, though the peak TDP is anticipated to be considerably higher.15

Intel’s decision to introduce a new socket (LGA-1954) for Nova Lake after only one generation of LGA-1851 signals a strategic prioritization of architectural leaps and higher power delivery for increased core counts and integrated AI features.13 This approach contrasts sharply with AMD’s more stable AM5 platform, potentially impacting consumer upgrade convenience and platform longevity. The rumored 52-core configuration with a sophisticated hybrid architecture (P-cores, E-cores, LP-E cores) and a dual-architecture GPU tile indicates Intel’s aggressive pursuit of multi-threaded performance and highly integrated AI/graphics capabilities.12 This positions Nova Lake as a strong contender for professional workloads, content creation, and potentially reducing the need for discrete GPUs in some mainstream segments.

Head-to-Head: Anticipated Performance and Market Positioning

The upcoming generation of CPUs from both AMD and Intel presents a fascinating competitive landscape, with each company appearing to refine its distinct performance strategy. AMD’s Zen 6 is targeting “well above 6 GHz” clock speeds and 10-15% IPC gains, indicating a focus on maximizing single-threaded performance and overall responsiveness.8 In contrast, Intel’s Nova Lake is rumored to feature an impressive core count of up to 52, alongside 9-18% IPC gains.10

According to leaker Moore’s Law Is Dead, Intel could achieve an approximate 11% lead over AMD in multi-threaded performance, primarily attributable to Nova Lake’s significantly higher core count.10 This suggests that for highly parallelized workloads, such as video rendering, scientific simulations, or complex software compilation, Intel’s offerings might provide a performance advantage.

However, AMD is widely anticipated to maintain its gaming performance lead.10 This is largely due to the nature of gaming workloads, which tend to be less multi-threaded and benefit significantly from higher clock speeds, IPC, and especially AMD’s 3D V-Cache technology.10 For context, AMD’s Ryzen 7 9800X3D is currently recognized as the “fastest gaming chip money can buy,” while Intel’s Arrow Lake has lagged behind comparably priced competing chips in gaming performance.16 The Ryzen 7 7800X3D is also highlighted as the “best AMD processor on the market for gamers” due to its outstanding performance.17

Therefore, the market positioning for these next-generation CPUs appears to be bifurcated. Professionals and users with heavily multi-threaded application needs will likely find Intel’s Nova Lake, with its high core counts, a compelling option.10 Conversely, gamers and enthusiasts prioritizing single-core performance and raw clock speed for the best in-game frame rates will likely continue to favor AMD’s Zen 6 architecture, particularly with the expected enhancements to its 3D V-Cache technology.10 This dynamic suggests a competitive environment where both companies carve out strong positions based on their distinct architectural strengths and target market segments.

Conclusions

Advanced Micro Devices is undergoing a significant transformation, driven by its strategic advancements in AI accelerators and integrated computing systems. The recent surge in analyst upgrades and substantial price target increases for AMD stock underscore a fundamental re-evaluation of the company’s long-term growth trajectory. This shift indicates that the market is now recognizing AMD’s strategic pivot and execution in AI as a sustainable competitive advantage, moving beyond its previous perception as merely a traditional semiconductor play. The outperformance of chip stocks over software stocks in the current AI cycle further emphasizes that the foundational layer of AI infrastructure is currently perceived as the primary beneficiary of the AI boom, indicating a robust and predictable revenue stream for key semiconductor players.

AMD’s aggressive “open-standards, rack-scale” strategy, centered around its Instinct MI300 series accelerators and the growing ROCm software ecosystem, positions it as a compelling and viable alternative to Nvidia’s proprietary solutions. The superior memory capacity of the MI300X, coupled with the impressive energy efficiency of the MI350, directly addresses critical needs of hyperscale cloud providers and sovereign AI initiatives. The active support from major tech partners like OpenAI, Oracle, and AWS for AMD as an “external alternative” to Nvidia underscores a broader industry imperative to diversify supply chains and mitigate risks associated with reliance on a single dominant vendor. This strategic diversification provides AMD with significant customer validation and crucial market access, elevating the competition from raw chip performance to total cost of ownership, ease of integration, and system-level efficiency for complex AI workloads.

Looking ahead to the CPU landscape, AMD’s Zen 6 architecture, slated for 2026, is poised to deliver substantial gains in clock speeds (“well above 6 GHz”) and IPC, leveraging TSMC’s cutting-edge 2nm lithography. This aggressive manufacturing leap, while carrying inherent risks, aims to establish a significant competitive lead in raw performance. The combination of high clock speeds, increased IPC, higher core counts per CCD, and a new inter-CCD bridge suggests a holistic architectural overhaul designed to maintain and extend AMD’s gaming performance leadership while also enhancing multi-threaded capabilities.

Conversely, Intel’s Nova Lake architecture, projected for 2026-2027, signals a distinct strategy focused on maximizing multi-threaded performance. With rumored configurations of up to 52 cores and a sophisticated hybrid architecture, Nova Lake is positioned as a strong contender for professional workloads and content creation. Intel’s decision to introduce a new LGA-1954 socket for Nova Lake, despite its short-lived predecessor, underscores a strategic prioritization of architectural leaps and higher power delivery for increased core counts and integrated AI features. This approach, while potentially impacting consumer upgrade convenience, aims to deliver bleeding-edge performance and highly integrated AI/graphics capabilities.

In conclusion, the competitive landscape for high-performance computing is evolving into a bifurcated market. AMD appears set to maintain its lead in gaming and single-threaded performance with Zen 6’s high clock speeds and 3D V-Cache technology. Intel, with Nova Lake’s significantly higher core counts and integrated AI/graphics, is likely to capture a strong position in multi-threaded professional workloads. Both companies are making substantial technological advances, and the unprecedented investments in AI infrastructure point to sustained, substantial demand for high-performance chips and integrated systems well into the future.

References

1. Analyst reboots AMD stock price target on chip update – TheStreet, accessed June 26, 2025, https://www.thestreet.com/technology/amd-stock-pops-as-wall-street-raises-the-bar

2. Chip stocks are beating software stocks this year, but here’s why both stand to win as AI demand grows | Morningstar, accessed June 26, 2025, https://www.morningstar.com/news/marketwatch/20250625202/chip-stocks-are-beating-software-stocks-this-year-but-heres-why-both-stand-to-win-as-ai-demand-grows

3. AMD MI300X Accelerator Unpacked: Specs, Performance, & More – TensorWave, accessed June 26, 2025, https://tensorwave.com/blog/mi300x-2

4. AMD Instinct™ MI300 Series Platform | Solution – GIGABYTE Global, accessed June 26, 2025, https://www.gigabyte.com/Solutions/amd-instinct-mi300

5. AMD Unveils Vision for an Open AI Ecosystem, Detailing New Silicon, Software and Systems at Advancing AI 2025 – Investor Relations, accessed June 26, 2025, https://ir.amd.com/news-events/press-releases/detail/1255/amd-unveils-vision-for-an-open-ai-ecosystem-detailing-new-silicon-software-and-systems-at-advancing-ai-2025

6. 25+ AI Data Center Statistics & Trends (2025 Updated) – The Network Installers, accessed June 26, 2025, https://thenetworkinstallers.com/blog/ai-data-center-statistics/

7. AMD: “Advancing AI” Event Shows a Promising Roadmap – Morningstar, accessed June 26, 2025, https://www.morningstar.com/stocks/amd-advancing-ai-event-shows-promising-roadmap

8. AMD Zen 6 Leaks points towards 6+ GHz clock speeds for Ryzen – OC3D, accessed June 26, 2025, https://overclock3d.net/news/cpu_mainboard/amd-zen-6-leaks-points-towards-6-ghz-clock-speeds-for-ryzen/

9. AMD’s next Zen 6 processors teased with ‘well over’ 6.0GHz CPU clocks – TweakTown, accessed June 26, 2025, https://www.tweaktown.com/news/105935/amds-next-zen-6-processors-teased-with-well-over-0ghz-cpu-clocks/index.html

10. AMD Zen 6 clock speed target of 6GHz+ is “very conservative” according to leak | Club386, accessed June 26, 2025, https://www.club386.com/amd-zen-6-clock-speed-target-of-6ghz-is-very-conservative-according-to-leak/

11. AMD Zen 6 Powers “Medusa Point” Mobile and “Olympic Ridge” Desktop Processors, accessed June 26, 2025, https://www.techpowerup.com/forums/threads/amd-zen-6-powers-medusa-point-mobile-and-olympic-ridge-desktop-processors.332583/

12. Intel’s powerful 2026 Nova Lake chip could render discrete graphics …, accessed June 26, 2025, https://www.laptopmag.com/laptops/windows-laptops/intels-2026-nova-lake-chip-leak-laptops

13. Intel Nova Lake CPUs Will Use New LGA-1954 Platform, Confirmed …, accessed June 26, 2025, https://pcoutlet.com/parts/cpus/intel-nova-lake-cpus-will-use-new-lga-1954-platform-confirmed-for-2026

14. Intel’s Nova Lake CPU reportedly has up to 52 cores — Coyote …, accessed June 26, 2025, https://www.tomshardware.com/pc-components/cpus/intels-nova-lake-cpu-reportedly-has-up-to-52-cores-coyote-cove-p-cores-and-arctic-wolf-e-cores-onboard

15. Next-gen Intel Core gaming CPU range set to have up to 52 cores …, accessed June 26, 2025, https://www.pcgamesn.com/intel/nova-lake-52-core-leak

16. CPU Benchmarks and Hierarchy 2025: CPU Rankings – Tom’s Hardware, accessed June 26, 2025, https://www.tomshardware.com/reviews/cpu-hierarchy,4312.html

17. The best processors in 2025: top CPUs from AMD and Intel – TechRadar, accessed June 26, 2025, https://www.techradar.com/news/best-processors

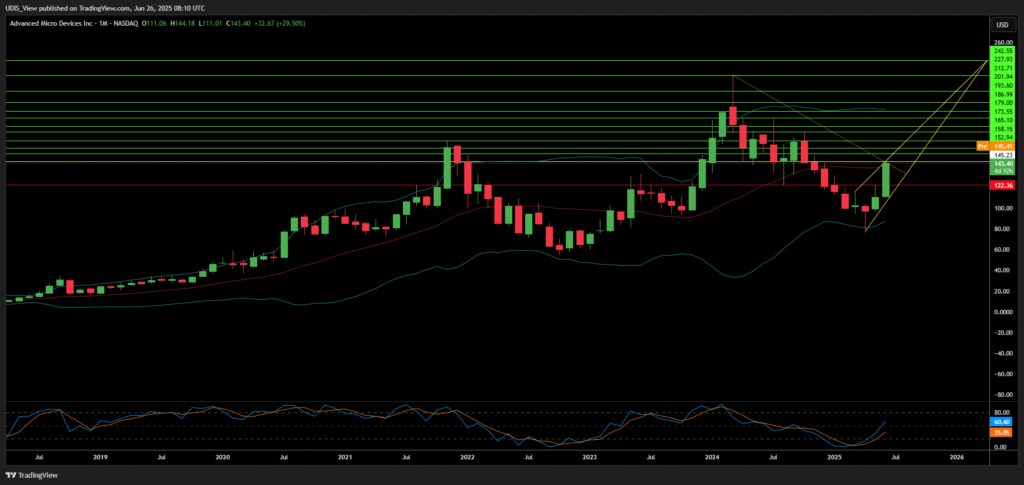

AMD Long (Buy)

Enter At: 145.23

T.P_1: 152.94

T.P_2: 158.15

T.P_3: 165.10

T.P_4: 173.55

T.P_5: 179.00

T.P_6: 186.99

T.P_7: 193.60

T.P_8: 201.94

T.P_9: 212.71

T.P_10: 227.93

T.P_11: 242.55

S.L: 122.36
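
The levels listed above can be expressed as reward-to-risk multiples, which makes it easier to see how each target compares with the distance to the stop. The sketch below is a minimal illustration using only the entry, stop, and target prices given above. The function and variable names are hypothetical, and the calculation ignores fees, slippage, and position sizing.

```python
ENTRY = 145.23
STOP_LOSS = 122.36
TAKE_PROFITS = [
    152.94, 158.15, 165.10, 173.55, 179.00, 186.99,
    193.60, 201.94, 212.71, 227.93, 242.55,
]


def r_multiple(target: float, entry: float = ENTRY, stop: float = STOP_LOSS) -> float:
    """Reward-to-risk ratio for a long trade: gain to target divided by loss to stop."""
    risk_per_share = entry - stop
    return (target - entry) / risk_per_share


def first_target_at_or_above(threshold: float) -> int:
    """1-based index of the first take-profit level whose multiple meets the threshold."""
    for index, target in enumerate(TAKE_PROFITS, start=1):
        if r_multiple(target) >= threshold:
            return index
    raise ValueError("no target reaches the requested multiple")


print(f"Risk per share: {ENTRY - STOP_LOSS:.2f} ({(ENTRY - STOP_LOSS) / ENTRY:.1%} of entry)")
for index, target in enumerate(TAKE_PROFITS, start=1):
    print(f"T.P_{index}: {target:.2f} -> {r_multiple(target):.2f}R")
print(f"First target at or above 1R: T.P_{first_target_at_or_above(1.0)}")
```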